So, you all know the deal… When it comes to coding there is one rule that all half-decent programmers aspire to. Nope, it’s not ‘Never toss a dwarf’, which, whilst reasonably good advice, is probably not relevant to the normal weekly challenges at the coal face of code. It is this: keep your code DRY (Don’t Repeat Yourself).

And we do, we really do. We all go to huge lengths, using interfaces and the like, to ensure that the same operations can be applied to differing but similar kinds of objects. However, when it comes to using SSIS for ETL work, all of these principles seem to go flying out of the door, at least they do for me. SSIS is not best suited to DRY; I have lost count of the number of times I have defined workflows like this:-

So, my challenge was to import ‘n’ identically formatted Excel files (with different data) into the same database table after adding a few snippets of data. In the screenshot above I have only shown you two of the Excel files to import; the client wanted 12 today, but up to 30 at some point in the future. Hmmmmm. We discussed the matter further and it became obvious that sometimes, just sometimes, they would only provide one file, or maybe even none. It quickly became apparent that my clumsy transformation as defined above would not work, for all of the following reasons:-

- Defining 30 import files would be lots of work, and they would then need to be merged together.

- Defining 30 files and then having NONE of them, or just one of them, resolve would have made the transformation fall over at run time with metadata issues.

- What if the client suddenly decided that they wanted 1000 files? I am NOT going to set all of those up manually.

- I would also have to define one connection for each file…

- There’s no flexibility. If they needed to support 30 files one week and ten the following week, as things stand we would need two different packages (or routines within the package).

- Most importantly, it violates DRY principles. If I ever needed to change the way SQL was selecting data from the files, I would need to make that change in 30 transformations, or even more!

So, I had a drawing board to get back to… Now, as a seasoned SSIS user, I must admit that I have never looked too deeply into some of the control flow components, and topmost amongst these is the Foreach Loop Container.

With a little bit of research I realised that this was exactly what I needed, so without further ado I decided to implement a solution for my challenge using this component. I started at the start, of course, by defining a new Excel connection (and ONLY one!). I pointed it at a file representative of the data that would be loaded at run time; remember that all of my import files are identical in format.

I would also need a couple of variables to allow me to store the Directory I am interrogating and to store the name of the file currently being processed in the Foreach Loop. These were defined like so:-

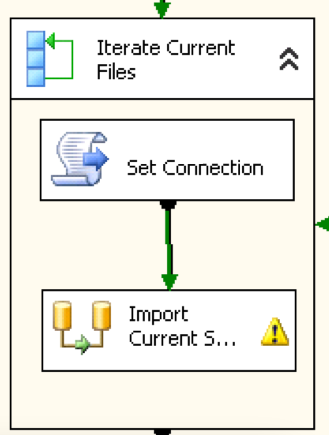

Now I could start to play with my Foreach Loop. I dragged a new Foreach Loop Container onto my Control Flow surface and then added a Script Task (more on that later) and my own workflow inside it. The result was this little transformation process:-

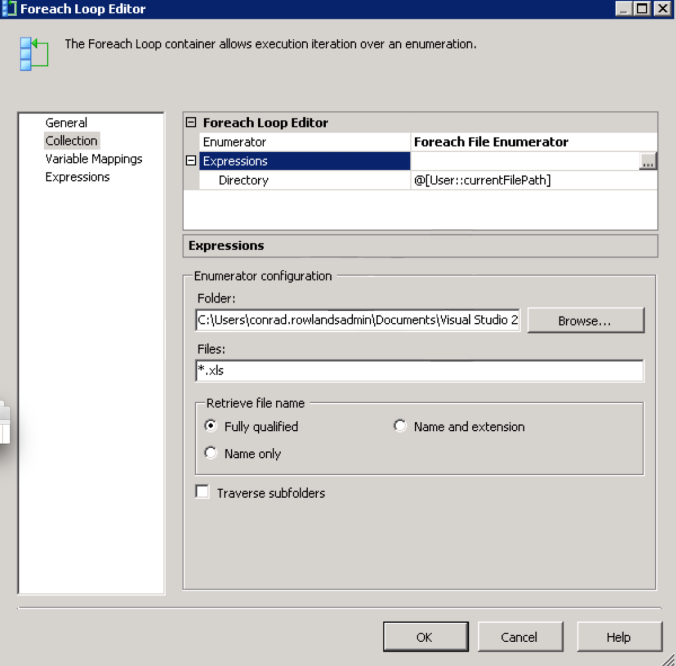

I then configured the Foreach Loop using the ‘Collection’ page, like so:-

As you can see, I have chosen the Foreach File Enumerator, which determines exactly what sort of behaviour this component will perform. Note how I have then created an expression (using the Expressions editor dialog) that sets the ‘Directory’ property to the value stored in my ‘currentFilepath’ variable. I could of course have just hard-coded the value using the Browse dialog further down the screen. In my instance I only want to use ‘xls’ files (Office 2003 format Excel files), so in the ‘Files’ field I specify *.xls. Finally, I want all of the file paths returned to me as fully qualified path names, so that is what I have selected.
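For readers who think better in code than in dialog boxes, here is a rough Python analogue of what those enumerator settings ask for. It is only a sketch of the behaviour, not anything SSIS itself runs. The function name `enumerate_xls_files` is made up for illustration, and the results are sorted purely to make the output predictable (the Foreach Loop makes no such promise).

```python
from pathlib import Path


def enumerate_xls_files(directory):
    """Return the fully qualified paths of the .xls files directly inside `directory`.

    Hypothetical helper: mirrors a Foreach File enumerator configured with
    a folder, a '*.xls' file spec and 'fully qualified' name retrieval.
    """
    folder = Path(directory)
    if not folder.is_dir():
        # A missing folder simply yields nothing to loop over in this sketch.
        return []
    # Exact suffix check (case-insensitive), so .xlsx files are not included.
    matches = (
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() == ".xls"
    )
    # resolve() gives an absolute path; sorting is only for repeatable output.
    return sorted(str(p.resolve()) for p in matches)


if __name__ == "__main__":
    # The loop body runs once per file, and not at all for an empty folder.
    for file_name in enumerate_xls_files("."):
        print("would process", file_name)
```

The point to notice is that an empty folder just means zero iterations, which is exactly the behaviour I needed for the weeks when the client sends nothing.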

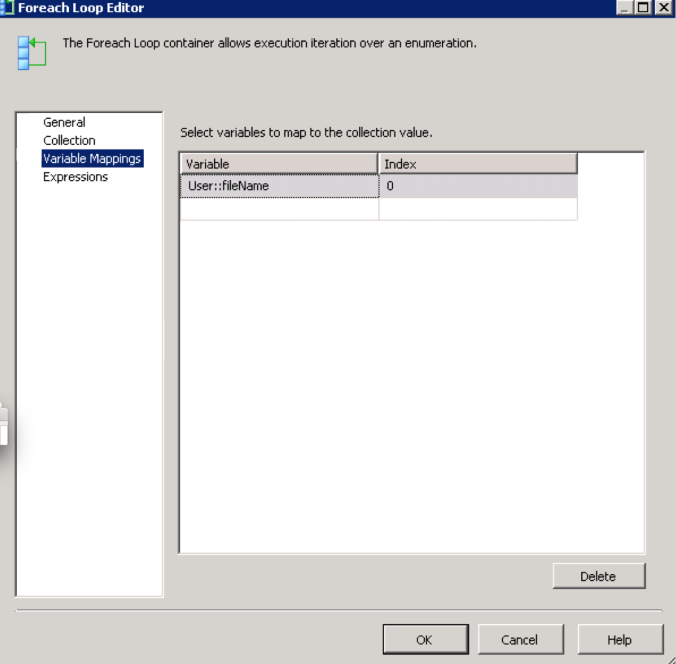

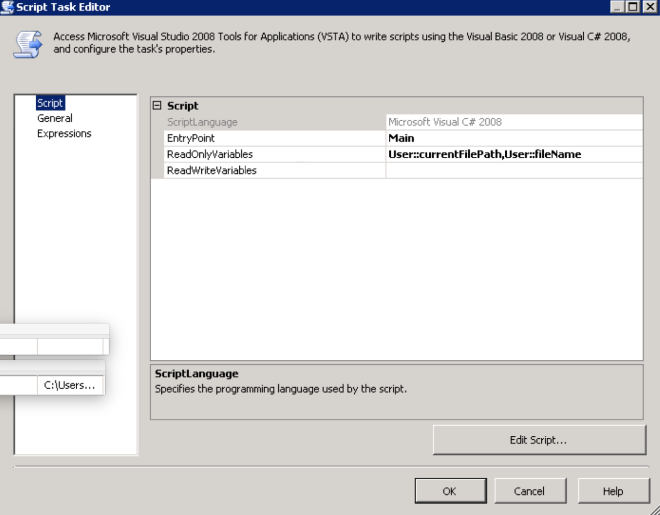

Then I needed to configure just what I should do with the value returned. In my instance I wanted to read the value (the fully qualified file name) into a variable for later use. We set that up using the ‘Variable Mappings’ page, as below. As you can see, we are putting the output result (with the file enumerator, index 0 is the ONLY option) into our ‘fileName’ variable. Once this has been done we have one job left to do: we need to programmatically change the connection string of our ‘Current Financial’ connection to point to the file we are currently processing. This is what the script task mentioned earlier is for. So firstly we need to tell the script task which user variables it has access to:-

Finally, we can now get to the scripting which is simplicity itself:-

As you can see all we really do is obtain references to the Connection object and the ‘fileName’ variable (both by name). We then create a valid Connection string using our new file name and finally we set this new connection string into the ConnectionString property of the Connection object.
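Since the screenshot of the script is not reproduced here, the string-building step can be sketched in Python. This is an illustration of the idea rather than my actual script task code. The function name `excel_connection_string` and the `has_header` parameter are invented for the example, and the Jet 4.0 / ‘Excel 8.0’ settings are the usual ones for .xls files, which I am assuming match the connection in the package.

```python
def excel_connection_string(file_path, has_header=True):
    """Build an OLE DB connection string for an Excel 97-2003 (.xls) workbook.

    Hypothetical helper: the provider and extended properties are assumed,
    not taken from the original package.
    """
    hdr = "YES" if has_header else "NO"
    return (
        "Provider=Microsoft.Jet.OLEDB.4.0;"
        f"Data Source={file_path};"
        f'Extended Properties="Excel 8.0;HDR={hdr}";'
    )


if __name__ == "__main__":
    # In the package, this value would be assigned to the ConnectionString
    # property of the single Excel connection on every pass of the loop.
    print(excel_connection_string(r"C:\imports\january.xls"))
```

Only the ‘Data Source’ part changes from one file to the next, which is why a single connection can serve every file in the folder.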

That’s it, we’re done. Running the package, we can see that it doesn’t matter whether there are zero or a million files in the directory: it will process them all (in no particular order) and add them to our staging table, ready for full adoption onto the system. I’m pleased with this solution; it has DRY’d my transformations up nicely and given me flexibility that I would not have been able to achieve any other way.